Kinetic by OpenStax

Duration | 1 year

Tools | Figma, usertesting.com, Google Analytics, After Effects

Role | Lead UX Designer

The Problem

Digital learning, including online learning, has become a key part of education, yet much remains unknown about how to facilitate student success in these learning environments. Although learning technologies promise to adapt quickly to the needs of different students and contexts, they can pose significant challenges to students and instructors if they are not grounded in principles of learning science and human-centered design.

To accelerate research initiatives and benefit from translating research into practice, we need to be able to conduct learning science and education research quickly and economically. We also need to verify that findings hold across the broad spectrum of contexts and populations that make up the US education system, and to use widely adopted digital platforms as testbeds for research.

For the digital teaching-learning experience to be most effective, learning tools and platforms should be equipped with strong research-backed features that support instructors and students alike.

Goals

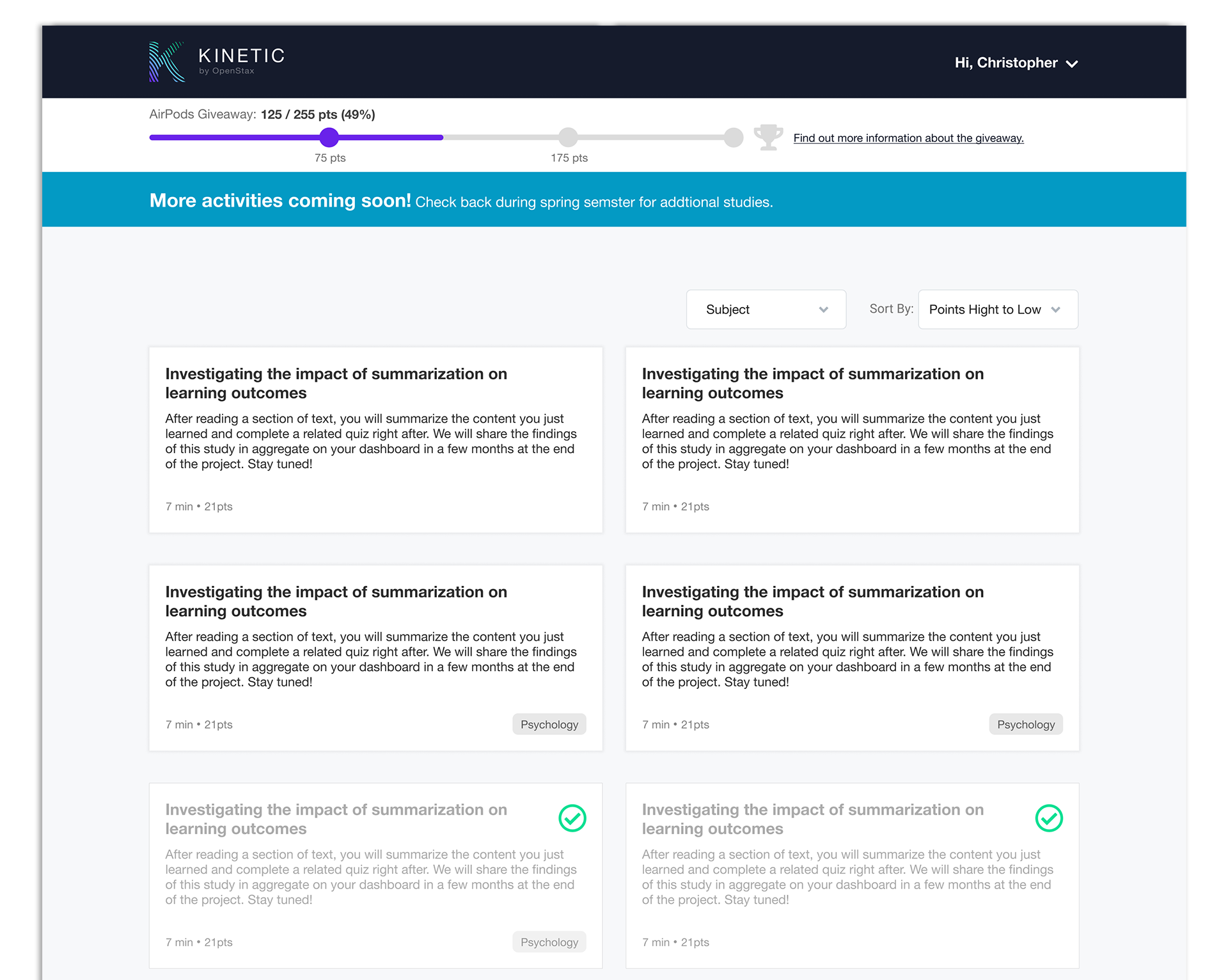

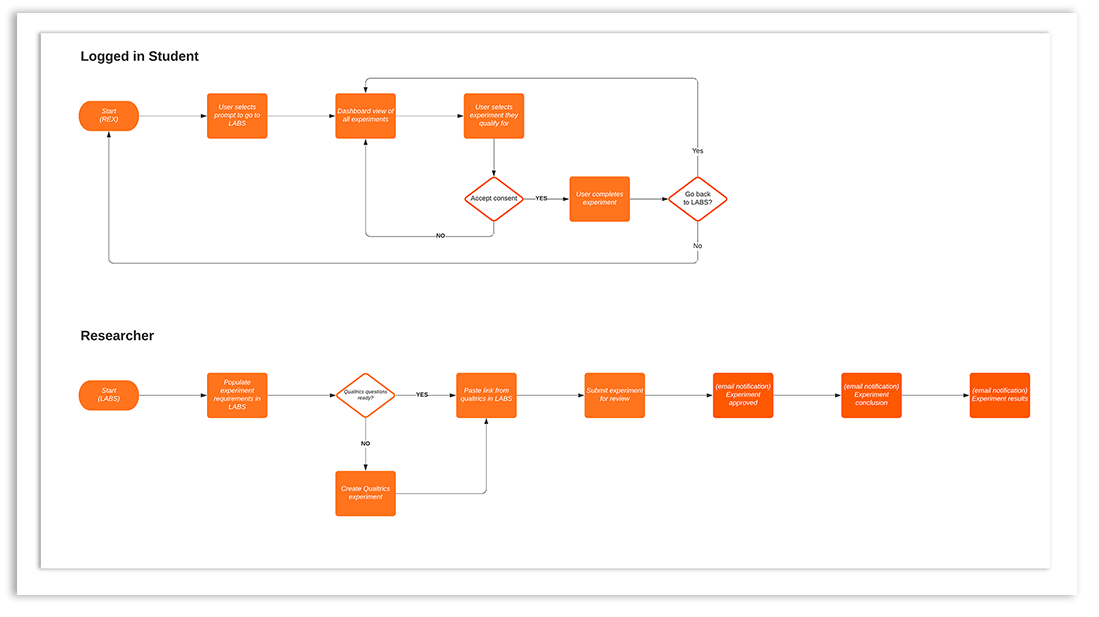

The goal was to create a lightweight research system integrated with the OpenStax platform where researchers can deploy a wide range of learning research studies at scale.

My Role

I was the UX/UI lead for this project. I also collaborated with developers and research scientists to gather requirements.

Research

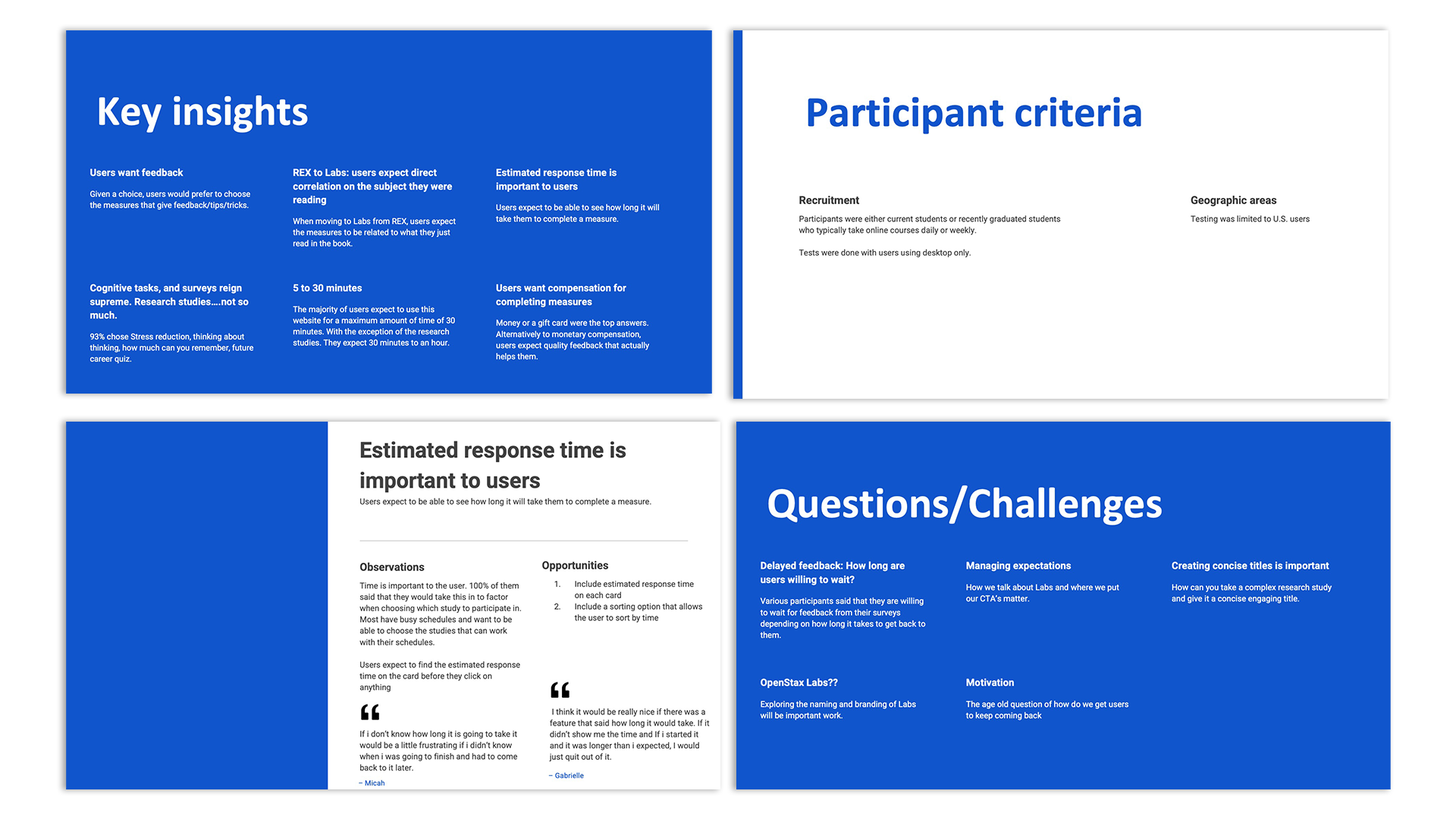

I kicked off this project with user interviews to understand what would motivate or incentivize students to participate in research on OpenStax Labs, and to gauge interest in the product. We also planned to partner with postsecondary institutions to maximize recruitment. With that in mind, I interviewed professors from these institutions to understand how they planned to incorporate our product into their workflows, so that I could start thinking about features that would ease adoption.

Testing Testing Testing

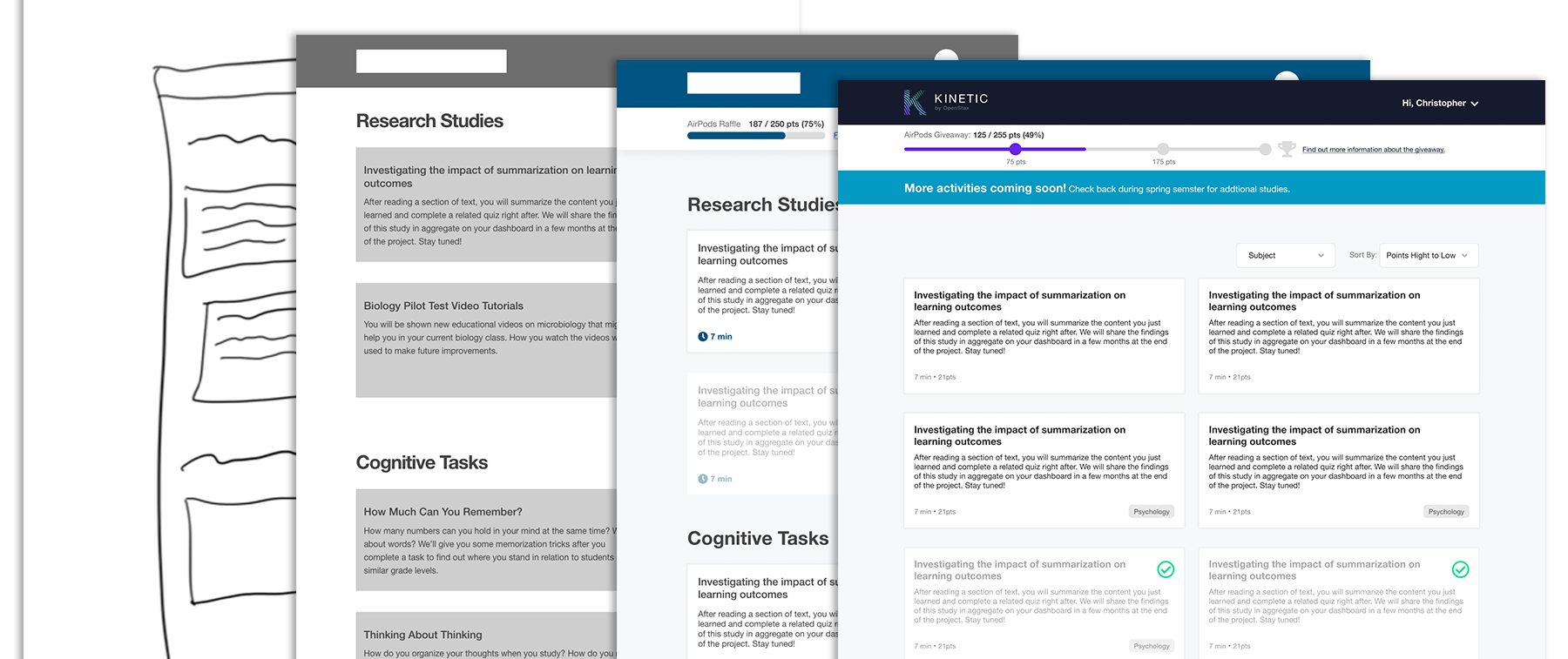

This project was new territory for us, so we embraced a "fail fast, fail often" approach early in the process. We started usability testing as soon as the initial low-fidelity designs were ready, to validate ideas and learn where the flow needed improvement. Testing revealed points in the flow that confused users, so we adapted the designs and kept testing until we got it right. We ran a mix of moderated and unmoderated tests, using usertesting.com to build, recruit for, and facilitate them.

Iterations

No one gets it right the first time, and this project was no different. Based on the results of the tests and interviews, we continued to refine the design, adding or dropping features according to whether we believed they would give users a great experience, while keeping the scope achievable by the deadline.

Takeaways

This was only the beginning of a five-year project. I was fortunate to be part of the initial conception and the release of the Alpha version in year 1. I worked with many great team members from across the company, but by far the most impactful experience was working with the research scientists on the core team. Their feedback along the way taught me techniques for crafting test and interview questions that remove bias and draw more detailed answers from users. Everyone involved was passionate about creating a great product and learning from one another, and that is what made the work exciting.